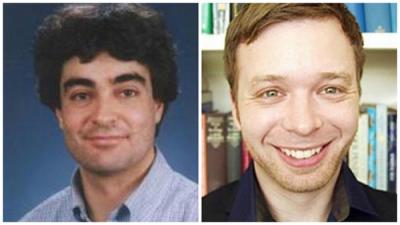

2015 Recipients

Dr. Aleix Martinez and Dr. Dylan Wagner

Neuroimaging and video databases for the study of naturalistic vision, language processing and social cognition

Over the last several years there has been an increasing trend towards the use of naturalistic stimuli in cognitive neuroscience. For example, recent research has relied on natural scenes, videos, audio stories and popular narrative films to understand how the brain represents a host of phenomena such as facial expressions, memory, semantics and social cognition. The database being developed combines validated and annotated stimulus sets for the study of social behavior, facial expressions, identity and high-level social cognition with functional neuroimaging data collected while participants view these stimulus sets. This resource will consist of both carefully annotated video stimuli of intentional actions, suitable for computational modeling, and naturalistic stimuli in the form of audio stories and audiovisual movies. Together with the associated neuroimaging data, this resource will enable further research in computer vision and cognitive neuroscience on the discriminative features of different social behaviors, their neural representation, and the possibility of building models that predict social inferences during naturalistic experience.

With regard to the video stimulus database, there is no precedent in the computer vision literature. The closest databases are small collections of actions such as running and shaking hands (Zhao & Martinez, 2015; Dollar et al., 2005; Park & Aggarwal, 2004), which do not treat intent as a variable of study. This stimulus set will be the first to allow a systematic study of the image features that lead to the visual interpretation of social behavior.

The second part of this resource, which focuses on natural viewing of audio and audiovisual narratives, is by design similar to the StudyForrest project (studyforrest.org), a publicly available 7-Tesla fMRI dataset of twenty people watching the movie Forrest Gump, and is being developed in consultation with the authors of that project. Our resource aims to complement and extend this project by collecting responses to multiple audiovisual and auditory narratives and by explicitly focusing on the social domain. By collaborating rather than competing with the StudyForrest project, we aim to enhance the usefulness of both datasets by allowing principled comparisons of data from both resources across languages (English for our project, German for StudyForrest) and media types. To enable this cross-project comparison, we propose to collect responses to a subset of the movie Forrest Gump so that functional alignment algorithms for fMRI (e.g., Haxby et al., 2011) can be used to bring the entirety of the CCBS and StudyForrest datasets into functional alignment with one another. This would enable cross-dataset comparisons of fine-grained neural responses to a variety of natural stimuli, and would allow researchers to take predictive models built on the CCBS dataset and test them on the StudyForrest dataset, and vice versa.

Across the entire resource, our overarching goal is to capitalize on cross-dataset model building using computer vision and machine learning methods. For instance, we will analyze the neural patterns associated with different features of social intentions and then attempt to “annotate” the intentions of characters in complex natural movies and in non-visual auditory narratives.

Although the focus of this research is on social perception, users of this resource are by no means limited to this domain. Because we will release all stimulus materials and neuroimaging datasets under a generous license, researchers in vision, memory and auditory perception will be able to use this resource in new and creative ways to answer questions in their respective domains. Moreover, scientists working in computer vision and neuroinformatics will be able to mine this dataset to develop novel algorithms or use it to validate different models of perceptual or cognitive function.

The resources being developed and made publicly available are cutting-edge. As researchers move beyond the study of faces and human body movements toward a more complex analysis of social behavior, one of the major hurdles limiting research in these areas is the lack of well-annotated databases. The databases, computer vision and fMRI studies in this resource will allow researchers from inside and outside the CCBS to pursue cutting-edge research on these highly significant and challenging scientific problems. It is our hope that these stimulus and neuroimaging databases will provide a valuable resource for the wider scientific community and contribute to the growing open-science movement.

To learn more about Dr. Martinez's and Dr. Wagner's research, visit their websites: